So based on #2 and #3 we can estimate the size of the dimension.If your country dimension has 200 rows, about 0.2 rows (0.1%) are updated every day, which means that 1 row per week on average. So if your customer dimension has 10 million rows, about 300k rows (3%) are updated or inserted every day. 3% for a very active dimension (like customer and product dimensions), 0.1% for very static dimension (like country and currency dimensions).

#DIMENSION TABLE UPDATE#

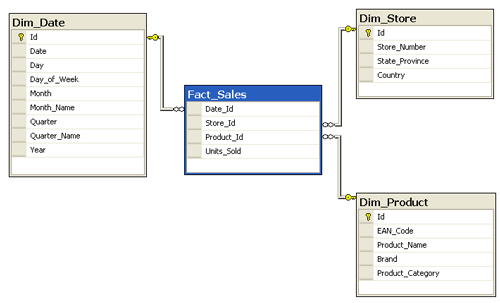

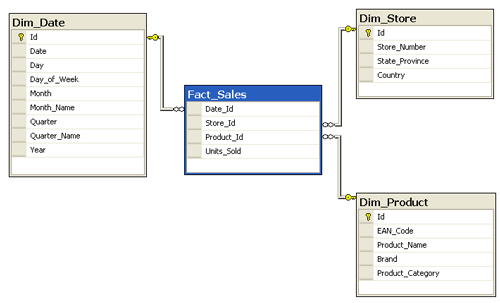

About 0.1-3% of the rows in the dimension tables are update or inserted every day. This is the volume dimension data uploaded to the data warehouse every single day. This is because most cases are 5%, only a few cases it is 10%. If you are not sure which number to take between 5% and 10%, take 5%. Of all date going through the ETL process, about 5-10% is dimension/static data, and 90-95% is fact/transaction data. This 15 GB is the total volume of data extract from all source systems, which is usually about 5-10% of the size of the source system, if we can do incremental extraction (use 50% if you can’t do extract the data incrementally). Total data volume each day loaded by ETL into the data warehouse from all source systems: 15 GB, comprised of say 13 GB delta load (incremental extract / transactional) and 2 GB static load (whole-table extract). We can then cross check this 44 GB using the daily volume of dimension data flowing into the data warehouse, to verify that we are not too far off from the reality. Then we multiply with the number of dimensions for each of the Small/Medium/Large category, like this: We can roughly estimate the size of a small/medium/large dimension based on the KB per row, like this: Up to 1000 rows is small, 1000 to 100k is medium, and > 100k rows is large dimension. OK let’s start with dimension tables, then the fact tables.Ĭategorise dimension tables into small, medium, and large, based on how many rows in the dimension. Of course, other DW architects may have different experience, and if so, please share it so we can all learn from you as well. designing and building data warehouses and BI, and what I wrote here is based on that experience. I’ve done about 10 projects of data warehousing, i.e.

This way you can estimate the disk requirement, which is one of the most difficult thing to do, if you don’t have the experience. Once you get that, you can estimate the size of the staging database based on the warehouse size (say 30-50% of DW size), size of the cubes (say 5-10% of DW size), size of the extract files (say 10-20% of DW size). The problem is, we do not know the size of the warehouse until we finished building the warehouse and ETL.īelow I describe the technique that I used to estimate the size of dimension and fact tables. In most cases, when we started designing a data warehouse, as a data warehouse architect the PM needs us to tell them what the size of the DW will be, so that infrastructure can start the procurement process, capacity planning, etc.

0 kommentar(er)

0 kommentar(er)